What HTTP/2 Means for Frontend Development

The release of HTTP/2 in 2015 was a big win for the modern web. While HTTP/1.1 worked well enough, it was built in an age before full two-way encryption, 1.9 MB web pages, and sub-3-second delivery times. We needed a modern protocol for a modern web, and HTTP/2 promised to deliver.

Of course, moving the entire web to a new protocol isn't easy. We've had 18 years of HTTP/1.1, which means 18 years of legacy applications, frameworks, and development practices. That's a long time in software terms, and now that HTTP/2 has addressed many of the older protocol's limitations, many of those applications and practices will need updating.

In this post, we'll look at the impact HTTP/2 has on frontend development. Keep in mind that HTTP/2 is still only supported by 83% of browsers. If you choose to implement these changes, be sure to check that your visitors will benefit from them.

Domain Sharding

One of the biggest limitations of HTTP/1.1 is the lack of multiplexing. An HTTP/1.1 connection delivers responses one at a time, in order, so resources sent over the same connection are downloaded one after the other. A website with 100 files served over a single connection transfers each file one by one, in the order the browser requests them, and no file begins transferring until the files before it finish.

To speed up downloads, browsers open several connections in parallel, but they limit how many they open to any single domain (typically around six). Resources on different domains don't share that limit. For example, if a website loaded the jQuery library from another server, the browser could download the page's HTML from the origin server while also downloading jQuery from the other server, leading to faster page load times.
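A deliberately simplified Python model helps show why the per-domain limit mattered. It assumes HTTP/1.1 without pipelining, six connections per hostname (a common browser default), and a fixed round-trip time and transfer time for every file. The function name and all parameter values are hypothetical, and real page loads also depend on bandwidth, TCP slow start, and connection setup.

```python
import math


def http1_load_time_ms(num_files: int, hosts: int, rtt_ms: float,
                       transfer_ms: float, conns_per_host: int = 6) -> float:
    """Estimate load time for HTTP/1.1 without pipelining (toy model).

    Each connection carries one request/response at a time, so files are
    fetched in "rounds": every open connection downloads one file per round,
    and each round costs one round trip plus the file's transfer time.
    Bandwidth sharing, TCP slow start, and connection setup are ignored.
    """
    parallel = hosts * conns_per_host          # simultaneous connections
    rounds = math.ceil(num_files / parallel)   # files wait behind each other
    return rounds * (rtt_ms + transfer_ms)


# 100 files, 50 ms round trip, 10 ms transfer per file (made-up numbers).
print(http1_load_time_ms(100, hosts=1, rtt_ms=50, transfer_ms=10))  # 1020
print(http1_load_time_ms(100, hosts=3, rtt_ms=50, transfer_ms=10))  # 360
```

Spreading the same files across three hostnames triples the number of simultaneous connections in this model, which is the effect domain sharding exploits.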

Domain sharding leveraged this by deliberately moving assets to different domains. HTML could be hosted on one domain while image, CSS, and JavaScript files were hosted on other domains or subdomains. Browsers would open separate connections to each domain and download the files in parallel, bypassing the limitations of HTTP/1.1.
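Sharded sites usually assign each asset to a hostname deterministically, so a file is always requested from the same URL and stays cacheable. The Python sketch below hashes the asset path with CRC-32 to pick a shard; the `static*.example.com` hostnames, the asset paths, and the `shard_url` helper are all hypothetical.

```python
import zlib

# Hypothetical shard hostnames; a real site would use its own subdomains.
SHARDS = ["static1.example.com", "static2.example.com", "static3.example.com"]


def shard_url(path: str, shards: list[str] = SHARDS) -> str:
    """Return an absolute URL for an asset on one of the shard hostnames.

    A stable hash (CRC-32) is used instead of round-robin or random choice,
    so the same asset always maps to the same hostname. Otherwise a browser
    could cache one file under several URLs and download it repeatedly.
    """
    path = path.lstrip("/")                       # normalize "/img/a.png"
    index = zlib.crc32(path.encode("utf-8")) % len(shards)
    return f"https://{shards[index]}/{path}"


for asset in ["img/image.png", "css/img.css", "js/jquery.js"]:
    print(shard_url(asset))
```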

How Does HTTP/2 Fix This?

HTTP/2 natively supports multiplexing over a single connection: the browser opens one TCP connection to the web server and sends all of its requests over it, with responses interleaved as independent streams. In this setting, domain sharding can actually hurt performance, since each new connection requires a DNS lookup, a TCP handshake, and a TLS handshake. With HTTP/2, it's easier and faster to move all assets to the same domain.
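The cost of an extra connection can be estimated by counting round trips. In this Python sketch (a hypothetical helper with illustrative numbers), fetching an asset over an already-open connection costs one round trip for the request, while an asset on a new shard first needs a DNS lookup, a TCP handshake (one round trip), and a TLS handshake, assumed here to be a full TLS 1.2 handshake of two round trips. Session resumption, TLS 1.3, and TCP Fast Open would all shrink the penalty.

```python
def first_byte_ms(new_connection: bool, dns_ms: float, rtt_ms: float,
                  tls_round_trips: int = 2) -> float:
    """Estimate time until an asset's first byte arrives (toy model).

    Reusing an open connection costs one round trip for the request.
    A new hostname first needs a DNS lookup, a TCP handshake (1 round trip),
    and a TLS handshake (tls_round_trips, 2 for full TLS 1.2) before the
    request can be sent.
    """
    request = rtt_ms
    if not new_connection:
        return request
    setup = dns_ms + rtt_ms * (1 + tls_round_trips)
    return setup + request


# Illustrative numbers: 30 ms DNS lookup, 50 ms round trip.
print(first_byte_ms(new_connection=False, dns_ms=30, rtt_ms=50))  # 50
print(first_byte_ms(new_connection=True, dns_ms=30, rtt_ms=50))   # 230
```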

Concatenation

The lack of multiplexing in HTTP/1.1 led to another clever trick called resource concatenation, where multiple small files, such as CSS and JavaScript files, are combined into a single larger file. Concatenation is also used to combine images into sprite sheets.

The idea behind concatenation is that the overhead of transferring one slightly larger file is less than that of multiple smaller files. The problem is that this reduces modularity, affecting both cache management and page load time. If a single CSS rule or JavaScript line changes, the browser has to download and cache the entire file again. Browsers might also end up downloading code that never gets used.
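A concatenation step can be sketched in a few lines of Python. The `build_bundle` and `concatenate_files` helpers are hypothetical; most projects leave this to a build tool, which typically also minifies the output. Files are joined in the order given because later CSS rules override earlier ones and scripts may depend on code defined earlier. This simple join does not guard against JavaScript files that rely on automatic semicolon insertion.

```python
from pathlib import Path


def build_bundle(sources: list[tuple[str, str]]) -> str:
    """Join (name, contents) pairs into one bundle, preserving order.

    A /* name */ comment (valid in both CSS and JavaScript) marks where each
    source begins, which helps when debugging the combined file.
    """
    return "".join(f"/* {name} */\n{text}\n" for name, text in sources)


def concatenate_files(paths: list[Path], output: Path) -> int:
    """Read each file, write the combined bundle, and return its size in bytes."""
    bundle = build_bundle([(p.name, p.read_text(encoding="utf-8")) for p in paths])
    data = bundle.encode("utf-8")
    output.write_bytes(data)
    return len(data)


# Example with in-memory sources instead of files on disk.
print(build_bundle([("reset.css", "body { margin: 0 }"),
                    ("img.css", "img { border-radius: 8px }")]))
```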

How Does HTTP/2 Fix This?

By reusing the same connection, multiplexing eliminates much of the overhead of downloading individual files. However, concatenation can still be useful when paired with compression. Compressing very small assets isn't worth the extra processing time, so some web servers let you set a minimum size below which responses aren't compressed (Nginx's gzip_min_length defaults to 20 bytes). Concatenating some of these small files lets them clear that threshold and compress more effectively.
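The effect is easy to check with Python's standard `gzip` module. This sketch compares compressing three tiny CSS snippets one by one against compressing them as a single bundle; every gzip stream carries at least 18 bytes of header and trailer, so tiny files can grow when compressed. `GZIP_MIN_LENGTH` mirrors the 20-byte default of Nginx's `gzip_min_length` directive, but `should_compress` is a hypothetical helper, not Nginx's actual logic, and the snippets are made up.

```python
import gzip

# Mirrors the default of Nginx's gzip_min_length directive (in bytes).
GZIP_MIN_LENGTH = 20


def should_compress(body: bytes, min_length: int = GZIP_MIN_LENGTH) -> bool:
    """Skip compression for responses shorter than the threshold."""
    return len(body) >= min_length


def gzipped_size(body: bytes) -> int:
    """Size of body after gzip compression, including header and trailer."""
    return len(gzip.compress(body))


# Made-up snippets standing in for tiny CSS files.
snippets = [b"a{color:red}", b"b{color:red}", b"i{color:red}"]

separate = sum(gzipped_size(s) for s in snippets)
bundled = gzipped_size(b"".join(snippets))

print("raw bytes:", sum(len(s) for s in snippets))
print("gzipped one by one:", separate)
print("gzipped as bundle:", bundled)
print("compress a single snippet?", should_compress(snippets[0]))  # False (12 bytes)
```

In this toy case each snippet gets larger when gzipped on its own, and the compressed bundle is smaller than the three separately compressed files combined.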

Inlining

Like concatenation, resource inlining is meant to reduce the number of downloads. Inlining embeds external resources directly into a web page's HTML. For example, JavaScript and CSS files become <script> and <style> blocks respectively, and images are Base64-encoded into data URIs in the src attribute of their <img> tags.

Normally, stylesheets and images are linked to in HTML documents like this:

<link rel="stylesheet" type="text/css" href="css/img.css" />
…
<img src="img/image.png" alt="My Image" />

After inlining, the contents of these resources appear directly in the HTML document:

<style type="text/css">
img { border-radius: 8px }
</style>

<img src="data:image/png;base64,SGVsbG8gd29ybGQhCg…" alt="My Image" />
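Inlining an image means Base64-encoding its bytes into a data URI. A minimal Python sketch of that step follows; `to_data_uri`, `inline_img_tag`, and `base64_length` are hypothetical helpers. The payload in the example above begins with the Base64 encoding of the text `Hello world!`, so it is a placeholder rather than real PNG data. The last helper shows why inlined images are larger: Base64 turns every 3 bytes into 4 characters, roughly a 33% increase.

```python
import base64
import html


def to_data_uri(data: bytes, mime_type: str) -> str:
    """Encode raw bytes as a data URI (no whitespace after the comma)."""
    encoded = base64.b64encode(data).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def inline_img_tag(data: bytes, mime_type: str, alt: str) -> str:
    """Build an <img> tag whose src carries the image bytes themselves."""
    src = to_data_uri(data, mime_type)
    return f'<img src="{src}" alt="{html.escape(alt, quote=True)}" />'


def base64_length(num_bytes: int) -> int:
    """Characters needed to Base64-encode num_bytes (with '=' padding)."""
    return 4 * ((num_bytes + 2) // 3)


print(to_data_uri(b"Hello world!\n", "image/png"))
# data:image/png;base64,SGVsbG8gd29ybGQhCg==
print(base64_length(30_000))  # a 30,000-byte image becomes 40000 characters
```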

While inlining reduces the number of files being transferred, it drastically increases the size of the base HTML document. It also prevents browsers from caching those resources separately, since they are now part of the web page: a change to either the page or an inlined resource means downloading both again.

How Does HTTP/2 Fix This?

Inlining counteracts one of HTTP/2's strongest features by simultaneously increasing the size of the HTML document and reducing the number of files to multiplex. Normally a browser would download the HTML, image, and stylesheet in parallel, but with inlining the browser must download all three as a single file. Keeping resources external not only lets browsers multiplex, but also lets browsers and proxy servers cache them for faster delivery.

Performance Comparison

We ran a benchmark comparing HTTP/1.1 with frontend optimizations against HTTP/2 with no optimizations, using three configurations:

- HTTP/1.1 with no optimizations

- HTTP/1.1 with domain sharding for images and inlining for JS and CSS

- HTTP/2 with no optimizations

Each of these websites was hosted on an f1-micro Google Compute Engine instance running Nginx 1.10.3. We used the default Nginx settings except for TLS: Firefox and Chrome only support HTTP/2 over TLS, so to keep encryption overhead from skewing the comparison, we enabled it on all three servers.

For the site's content, we used the Space Science website template from Free Website Templates. We used Browsertime 3.0.13 to collect performance statistics. For each of the following metrics, we display the mean value (in milliseconds) calculated by Sitespeed.io:

- FirstPaint: The time until the browser first starts rendering the page.

- BackEndTime: The time required for the server to generate and start sending the HTML.

- ServerResponseTime: The time required for the server to send the response.

- PageLoadTime: The time required for the page to load, from the initial request to load completion in the browser.

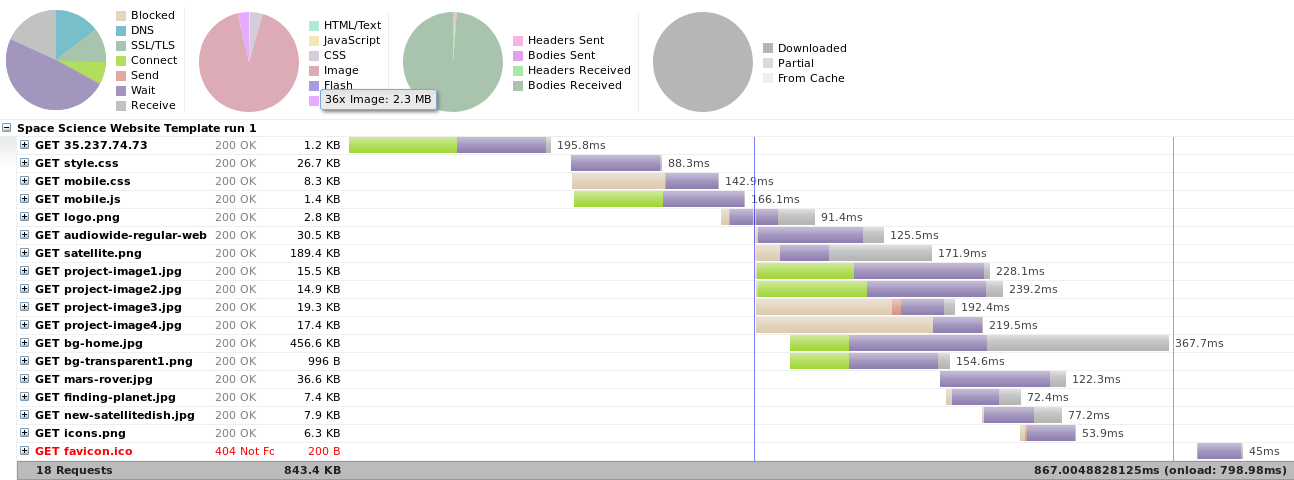

HTTP/1.1 Without Optimizations

Results Table

| Metric (ms) | Test 1 | Test 2 | Test 3 | Average |
|---|---|---|---|---|
| FirstPaint | 469 | 491 | 463 | 474 |
| BackEndTime | 205 | 184 | 188 | 192 |
| ServerResponseTime | 90 | 84 | 95 | 90 |
| PageLoadTime | 810 | 712 | 833 | 785 |

Timeline

Unsurprisingly, no optimization resulted in the longest page load time. Resources spend most of their time blocked while other resources finish downloading, giving the timeline much more of a waterfall shape.

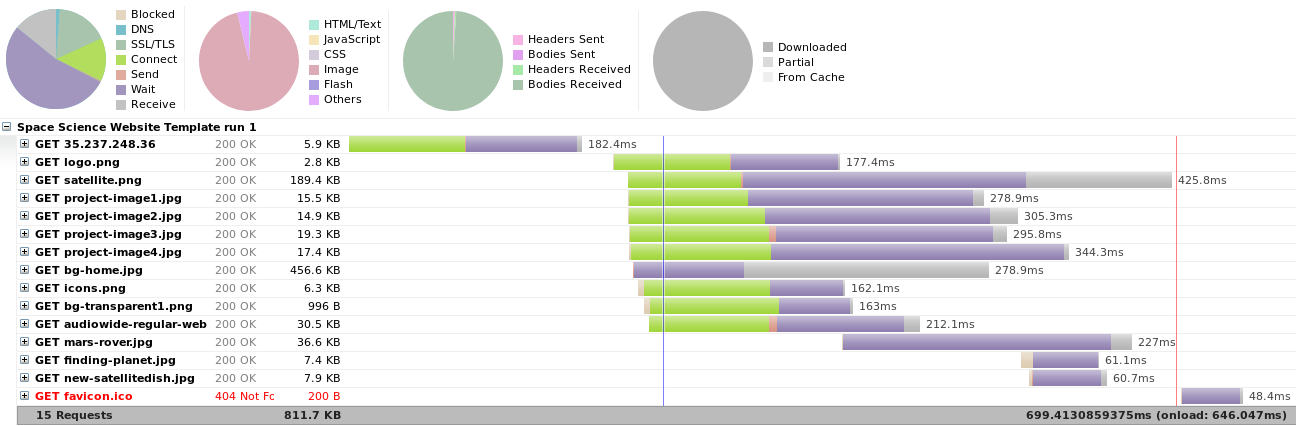

HTTP/1.1 With Optimizations

Results Table

| Metric (ms) | Test 1 | Test 2 | Test 3 | Average |
|---|---|---|---|---|
| FirstPaint | 359 | 336 | 341 | 345 |
| BackEndTime | 181 | 189 | 200 | 190 |
| ServerResponseTime | 91 | 76 | 95 | 87 |
| PageLoadTime | 647 | 586 | 613 | 615 |

Timeline

Using concatenation, inlining, and domain sharding resulted in much faster download times, saving about 130ms on FirstPaint (474ms vs. 345ms) and 170ms on PageLoadTime (785ms vs. 615ms).

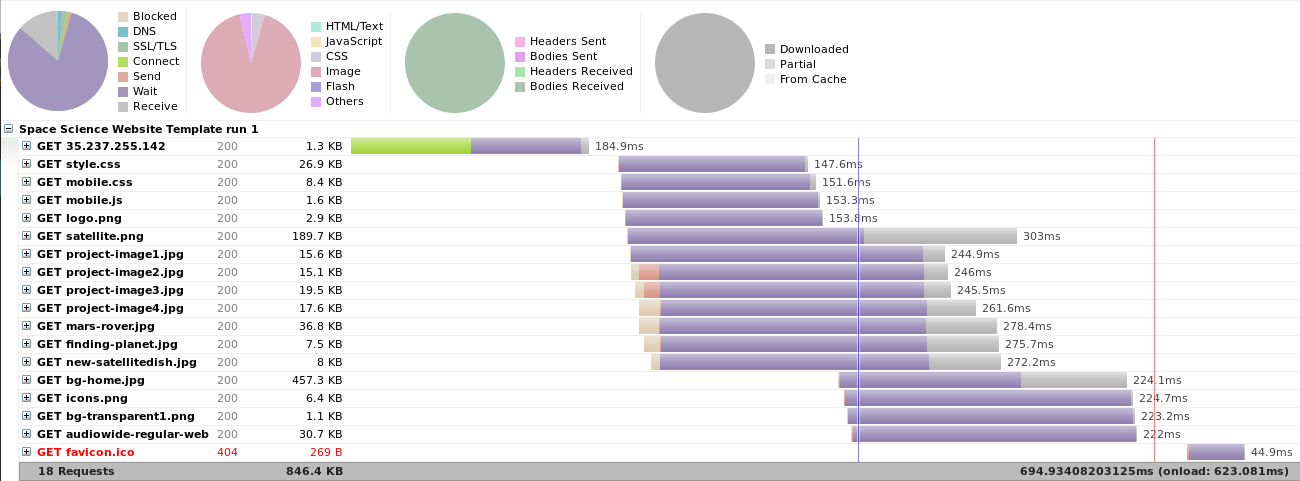

HTTP/2 Without Optimizations

Results Table

| Metric (ms) | Test 1 | Test 2 | Test 3 | Average |
|---|---|---|---|---|
| FirstPaint | 488 | 477 | 444 | 470 |
| BackEndTime | 184 | 183 | 181 | 183 |
| ServerResponseTime | 92 | 86 | 88 | 89 |
| PageLoadTime | 624 | 641 | 601 | 622 |

Timeline

Although HTTP/2 was slightly slower overall than HTTP/1.1 with optimizations (622ms vs. 615ms average PageLoadTime, and a more noticeable 470ms vs. 345ms for FirstPaint), the savings are in connection setup. Instead of opening multiple connections, the browser reused its initial connection to download and queue other resources. As a result, most individual resources took less time to download than over HTTP/1.1, even though the total time was slightly longer.
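The averages and differences quoted in this section can be recomputed from the per-run numbers in the results tables. This Python sketch does that; the dictionary layout and the `averages` helper are just one way to organize the data.

```python
# Per-run measurements in milliseconds, taken from the three results tables.
RESULTS = {
    "HTTP/1.1": {
        "FirstPaint": [469, 491, 463], "BackEndTime": [205, 184, 188],
        "ServerResponseTime": [90, 84, 95], "PageLoadTime": [810, 712, 833],
    },
    "HTTP/1.1 optimized": {
        "FirstPaint": [359, 336, 341], "BackEndTime": [181, 189, 200],
        "ServerResponseTime": [91, 76, 95], "PageLoadTime": [647, 586, 613],
    },
    "HTTP/2": {
        "FirstPaint": [488, 477, 444], "BackEndTime": [184, 183, 181],
        "ServerResponseTime": [92, 86, 88], "PageLoadTime": [624, 641, 601],
    },
}


def averages(results: dict[str, dict[str, list[int]]]) -> dict[str, dict[str, int]]:
    """Mean of the three runs per metric, rounded to the nearest millisecond."""
    return {
        config: {metric: round(sum(runs) / len(runs)) for metric, runs in metrics.items()}
        for config, metrics in results.items()
    }


avg = averages(RESULTS)
for metric in ("FirstPaint", "PageLoadTime"):
    saved = avg["HTTP/1.1"][metric] - avg["HTTP/1.1 optimized"][metric]
    gap = avg["HTTP/2"][metric] - avg["HTTP/1.1 optimized"][metric]
    print(f"{metric}: optimizations saved {saved} ms; HTTP/2 trails by {gap} ms")
```

Running it shows the HTTP/1.1 optimizations saving 129 ms on FirstPaint and 170 ms on PageLoadTime, with HTTP/2 trailing the optimized HTTP/1.1 site by 125 ms and 7 ms respectively.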

Conclusion

While HTTP/2 fixes a lot of issues with HTTP/1.1, that doesn't mean all frontend development practices are obsolete. Practices such as minification and hosting resources on a Content Delivery Network (CDN) are still recommended for reducing file size and increasing download speeds. HTTP/2 also introduces backend features, such as header compression and server push, that promise to make websites even faster. We suspect that using server push for critical resources could bring the all-important FirstPaint time down to the level of HTTP/1.1 with frontend optimizations.

You can learn more about HTTP/2 on the HTTP/2 home page.