Optimizing Backend Operations with Fragment Caching

When you think of web caching, you might picture a content delivery network (CDN), browser cache, or reverse proxy. These types of caches help deliver web pages and resources to visitors faster. But while these work great for static content, content that changes on each visit can be difficult or even impossible to cache, meaning your origin server has to do more work and respond to more requests.

Although dynamic content can't always be cached on the network, parts of it can be cached on the server itself. This is known as fragment caching, and it can go a long way towards improving your web application's performance.

What is Fragment Caching?

Fragment caching (also known as object caching) involves caching the results of an operation done in a web application. Whenever the application needs to repeat this operation, it instead pulls the results directly from the cache. It's the same idea as a network cache, only it applies to your backend services instead of your frontend users.

For example, imagine you host a basic blog on your server. When a user loads the front page, the application retrieves a list of posts from the database. If another user visits the front page, the application makes another call to the database even if the post list hasn't changed, essentially duplicating the processing time for the same results.

Usually this wouldn't be an issue thanks to network caching, but what happens when you want to personalize your front page for each user? Should you cache every possible front page configuration, or should you just let your server regenerate the entire page for each visit? Fragment caching creates a middle ground by letting you reuse dynamic content while reducing the workload on your servers.
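To make the middle ground concrete, here is a minimal sketch of a personalized front page that reuses one shared fragment. Everything in it is illustrative rather than taken from a real application: `fetchRecentPosts()`, `renderFrontPage()`, the post titles, and the plain array used as the cache are all assumptions. A plain array only lives for the duration of one request, so a real application would use a shared store instead.

```php
<?php
// Hypothetical stand-in for the database call; it counts how often it runs.
function fetchRecentPosts(int &$queryCount): array
{
    $queryCount++;
    return ["Hello, world", "Caching 101", "Scaling PHP"];
}

function renderFrontPage(string $userName, array &$fragmentCache, int &$queryCount): string
{
    // Shared fragment: identical for every visitor, so build it once and reuse it.
    if (!isset($fragmentCache["post-list"])) {
        $items = "";
        foreach (fetchRecentPosts($queryCount) as $title) {
            $items .= "<li>" . htmlspecialchars($title) . "</li>";
        }
        $fragmentCache["post-list"] = "<ul>" . $items . "</ul>";
    }

    // Personalized fragment: cheap to build, so regenerate it on every request.
    $greeting = "<p>Welcome back, " . htmlspecialchars($userName) . "!</p>";

    return $greeting . $fragmentCache["post-list"];
}

$queryCount = 0;      // number of simulated database queries
$fragmentCache = [];  // plain array standing in for a real cache

$pageForAlice = renderFrontPage("Alice", $fragmentCache, $queryCount);
$pageForBob = renderFrontPage("Bob", $fragmentCache, $queryCount);

echo $pageForAlice, PHP_EOL, $pageForBob, PHP_EOL;
echo "Database queries run: ", $queryCount, PHP_EOL;
```

Both visitors get their own greeting, but the post list is only built once, so the simulated query runs a single time.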

How Do I Implement Fragment Caching?

While there are many ways to implement fragment caching, one of the most popular is through Memcached. Memcached is a generic object caching system for multiple languages and frameworks. It lets you store objects as key-value pairs in a shared memory pool, which you can access from different processes.

Take the blog example from the previous section. When a user visits the front page, a database query retrieves a list of the most recent posts. Normally this query runs each time the front page is refreshed, regardless of whether the list has changed. With Memcached, you can store the results of this query and read them directly from memory instead of having to connect to the database. You can also set an expiration time for each cached object, so stale results are dropped automatically.

Example

In this example, we'll use Memcached to store the results of a database query in a PHP application. First, we'll try to retrieve the data set from Memcached. If Memcached returns nothing, either the data hasn't been cached yet or the cached version has expired. In that case, we'll re-run the query and store the fresh results in the cache before continuing. For the full code, see the Benchmarking section below.

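<?php
// Set up the database connection and assign it to $db

// Connect to a local Memcached server
$cache = new Memcached();
$cache->addServer("localhost", 11211);

// $key identifies the key-value pair in Memcached containing the data set
$key = "query-results";
$results = $cache->get($key);

// get() returns false on a miss. Compare strictly so that an empty
// result set (an empty array) still counts as a cache hit.
if ($results === false) {
    // Re-run the database query ($query is the statement executed on $db)
    $results = $query->fetchAll();

    // Store the fresh results for the next request
    $cache->set($key, $results);
}

// Render $results
?>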
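The get-or-recompute pattern above can be wrapped in a small helper that also sets an expiration time. The following is only a sketch: `remember()` and `ArrayCache` are hypothetical names, and `ArrayCache` is an in-memory stand-in that mimics the `get()` and `set()` calls used above so the code runs without a Memcached server.

```php
<?php
// Hypothetical in-memory stand-in for Memcached (assumption, for illustration only).
class ArrayCache
{
    private $items = [];

    public function get(string $key)
    {
        if (!isset($this->items[$key])) {
            return false; // same miss signal as Memcached::get()
        }
        list($value, $expiresAt) = $this->items[$key];
        if ($expiresAt !== 0 && $expiresAt <= time()) {
            unset($this->items[$key]); // entry has expired
            return false;
        }
        return $value;
    }

    public function set(string $key, $value, int $ttl = 0): bool
    {
        // A TTL of 0 means "never expire", as in Memcached::set()
        $this->items[$key] = [$value, $ttl === 0 ? 0 : time() + $ttl];
        return true;
    }
}

// Return the cached value for $key, or compute it, cache it for $ttl seconds, and return it.
function remember($cache, string $key, int $ttl, callable $compute)
{
    $value = $cache->get($key);
    if ($value === false) {
        $value = $compute();
        $cache->set($key, $value, $ttl);
    }
    return $value;
}

$cache = new ArrayCache();
$calls = 0;
$loadPosts = function () use (&$calls) {
    $calls++; // stands in for the database query
    return ["Hello, world", "Caching 101"];
};

$first = remember($cache, "query-results", 300, $loadPosts);
$second = remember($cache, "query-results", 300, $loadPosts);

echo "Loader ran ", $calls, " time(s)", PHP_EOL;
```

With a real server, the same helper should work if you pass in the `Memcached` instance, since `Memcached::set()` accepts an expiration in seconds as its third argument. One limitation of this design is that a legitimately cached `false` is indistinguishable from a miss, so it suits values such as result arrays rather than booleans.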

Benchmarking Memcached

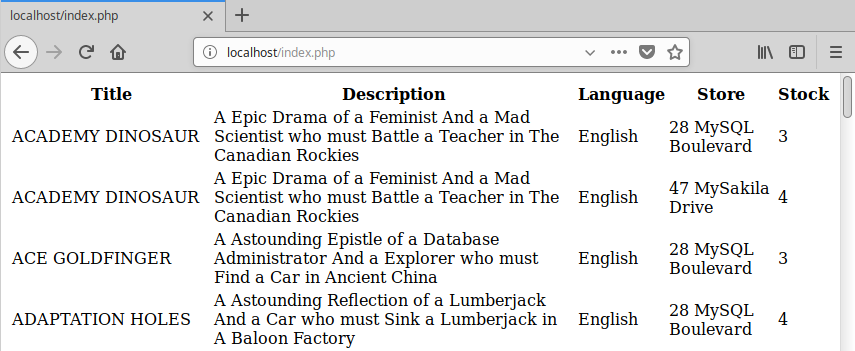

To see the impact fragment caching can have on performance, we created a simple PHP application connected to a MySQL database. The application is an inventory tracking system for a video rental store, based on the MySQL Sakila sample database. When a user loads the page, PHP runs a query that retrieves each video in the system, the store it belongs to, and the number of copies currently in stock. We created two separate scripts: one that stores the results of the query in Memcached, and one that re-runs the query each time.

How the site appears in the browser

Both scripts were hosted on an f1-micro Google Compute Engine instance running Apache 2.4.25, PHP 7.0.27, and MariaDB 10.1.26. To test the site, we used version 7.0.3 of the Sitespeed.io Docker container with Chrome. For each of the following metrics, we measured the mean value (in milliseconds) calculated by Sitespeed.io:

- FirstPaint: The time until the browser first starts rendering the page.

- BackEndTime: The time required for the server to generate and start sending the HTML.

- ServerResponseTime: The time required for the server to send the response.

To keep the MySQL Query Cache from favoring whichever test ran second, we ran the query directly on the MySQL server before running either test, so that both tests started with the query cache already warm.

Without Memcached

| Metric (ms) | Test 1 | Test 2 | Test 3 | Average |
| --- | --- | --- | --- | --- |
| FirstPaint | 383 | 312 | 293 | 329 |
| BackEndTime | 145 | 145 | 133 | 141 |
| ServerResponseTime | 145 | 144 | 135 | 141 |

Without Memcached, the results were fairly consistent across each test. The modest improvement in later runs (FirstPaint fell from 383 ms to 293 ms, and BackEndTime from 145 ms to 133 ms) is likely due to MySQL using its own internal cache to store and retrieve the query results.

With Memcached

| Metric (ms) | Test 1 | Test 2 | Test 3 | Average |
| --- | --- | --- | --- | --- |
| FirstPaint | 278 | 248 | 283 | 270 |
| BackEndTime | 182 | 131 | 131 | 148 |
| ServerResponseTime | 174 | 133 | 133 | 147 |

Using Memcached, there's a noticeable increase in processing time during the first run. This is most likely due to the initial connection to Memcached as well as storing the results of the query. The second and third runs, both of which find the results already cached, were faster than all of the cache-free runs. Comparing the second and third runs of the Memcached test to the second and third runs of the cache-free test, Memcached reduced the mean FirstPaint by just over 12% (from 302.5 ms to 265.5 ms). The gains in BackEndTime and ServerResponseTime were smaller, at roughly 6% and 5% respectively.
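The comparison of the second and third runs can be reproduced with a few lines of arithmetic. This sketch takes its numbers from the two tables above; the `percentFaster()` helper and the choice to compare the means of runs 2 and 3 are assumptions about how the 12% figure was derived.

```php
<?php
// Runs 2 and 3 from the tables above, in milliseconds: [Test 2, Test 3].
$withoutCache = [
    "FirstPaint" => [312, 293],
    "BackEndTime" => [145, 133],
    "ServerResponseTime" => [144, 135],
];
$withCache = [
    "FirstPaint" => [248, 283],
    "BackEndTime" => [131, 131],
    "ServerResponseTime" => [133, 133],
];

// Percentage reduction in the mean time of the cached runs relative to the baseline.
function percentFaster(array $baseline, array $cached): float
{
    $baselineMean = array_sum($baseline) / count($baseline);
    $cachedMean = array_sum($cached) / count($cached);
    return ($baselineMean - $cachedMean) / $baselineMean * 100;
}

$improvement = [];
foreach ($withoutCache as $metric => $runs) {
    $improvement[$metric] = percentFaster($runs, $withCache[$metric]);
    printf("%-20s %.1f%% faster\n", $metric, $improvement[$metric]);
}
```

Under that assumption, FirstPaint improves by about 12.2%, BackEndTime by about 5.8%, and ServerResponseTime by about 4.7%, so the figure of over 12% corresponds to FirstPaint.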

Conclusion

For sites that make heavy use of dynamic content, fragment caching can offer huge benefits. Besides database data, fragment caching can also store session data, results from heavy computations, or even complete HTML elements. While it may not reduce the number of hits to your servers, it can help your application respond to requests faster.